Creating a Short Film with Machine Learning

Hello everyone! My name is Kira. I am a filmmaker and multidisciplinary artist. I am drawn towards exploring perceptions of reality within my projects. This past month I took part in the virtual Runway Flash Residency. Runway ML is an incredibly cool application that makes machine learning more easily accessible for creatives.

During the residency I decided to make a film made up entirely of machine-learned elements: machine-learned sets, characters, textures, etc. I was very curious to see what would unfold…

Conceptually, I chose to explore variations of “identity.” Lessons From My Nightmares follows the story of a girl dealing with insomnia due to compartmentalized thoughts and feelings. When she does sleep, she is haunted by nightmares. It is within these nightmares that the repressed parts of herself try to communicate with her. In the day she has a “projected self,” i.e., the self we show to the world. Only through allowing her feelings to be expressed can she synthesize her many selves and finally have peace of mind… and finally get some sleep.

In this post I will discuss the steps I went through to create this film using Runway and After Effects.

The Film: Lessons From My Nightmares

Training Models

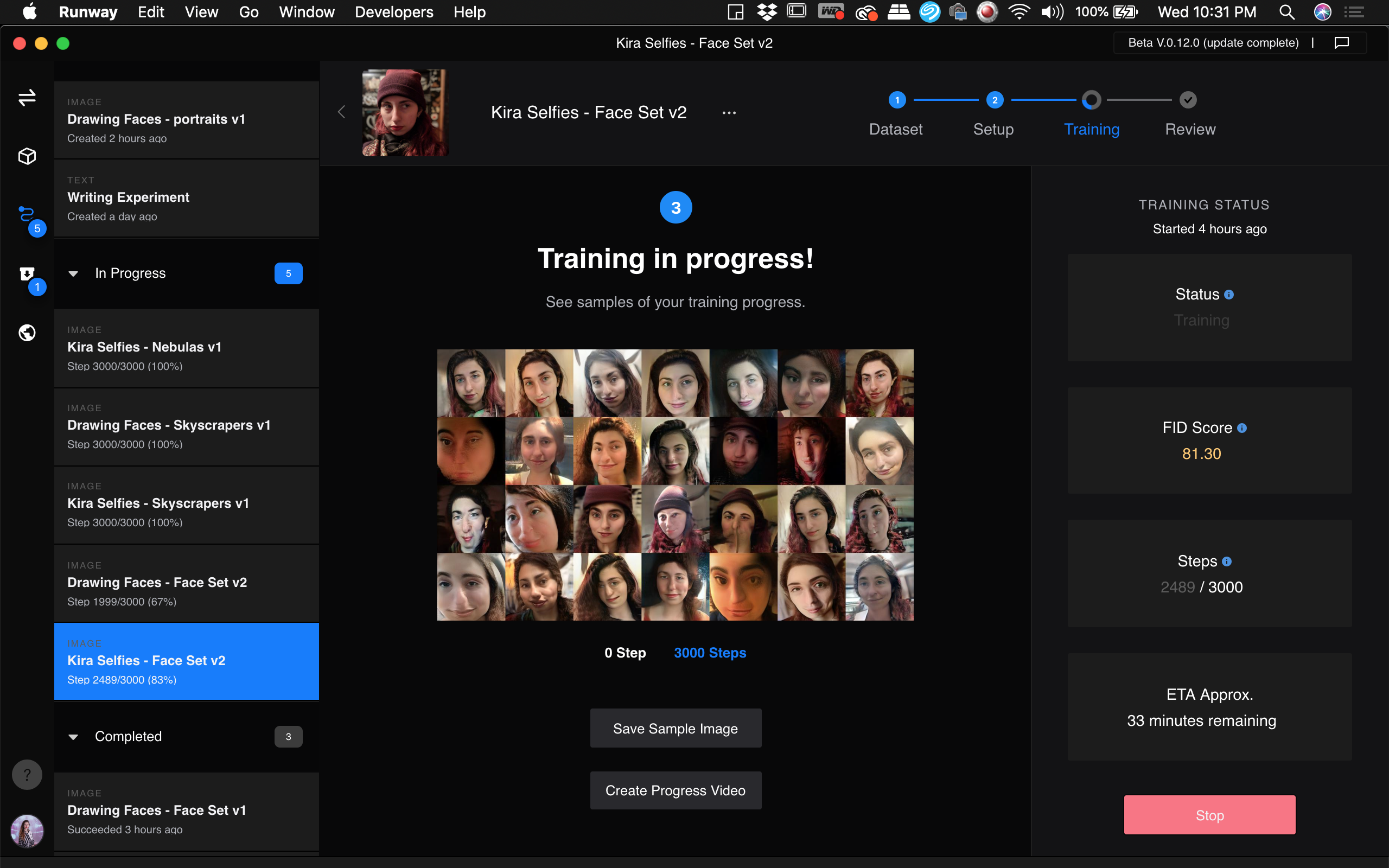

I began the residency by experimenting with training models. I worked with four main datasets:

- Selfies (~150 pictures)

- Pictures of my film sets + film locations (~200 pictures)

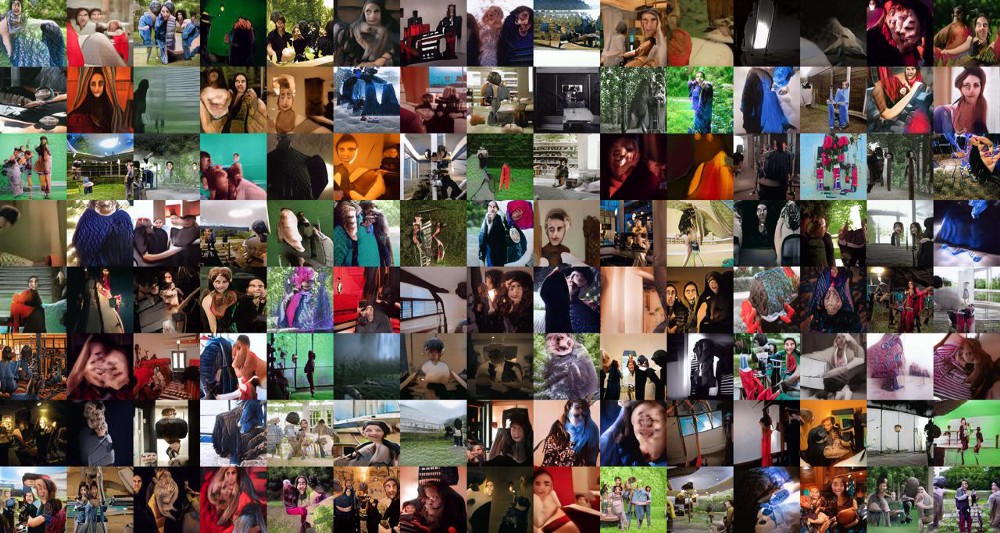

- Behind-the-scenes, on-set photos from my film productions over the past 6 years (~4,600 pictures)

- Drawings I’ve made (~100 pictures)

I ended up using elements from all of the models except for the ones utilizing my drawings. I may save that for a future project — it just didn’t seem to fit into this film. I will not go into much more detail about the results of the drawings in this post.

Variations on Training

When training the models I experimented with training the same datasets off of different pretrained models and varying numbers of steps. Here are the results of a few of those variations:

I trained the selfies off of the pretrained models of faces, skyscrapers and nebulas all with 3,000 steps. The faces set produced the highest quality imagery (the files are 1000x1000 pixels versus 500x500 or 250x250). The results were the most strange and disturbing — my face realistically contorted in unexpected ways. The nebulas set produced disintegrated looking versions of my face. Surprisingly, training the selfies off of the skyscraper model produced results that looked more like the real me, but in a more painterly & artistic style.

I trained both the behind-the-scenes photos and the film set photos using the pretrained models of faces and bedrooms. In both cases, training off of the faces set produced beautiful and abstract textures and shapes. I was very surprised by the results of training the behind-the-scenes photos off of the bedroom model: surreal and eerie faces appear in strange scenes like a fever dream. The oddest part: I can somewhat recognize these ‘memories’ but they are abstract enough that I cannot quite remember when or where or what… but I feel a connection to what I am seeing.

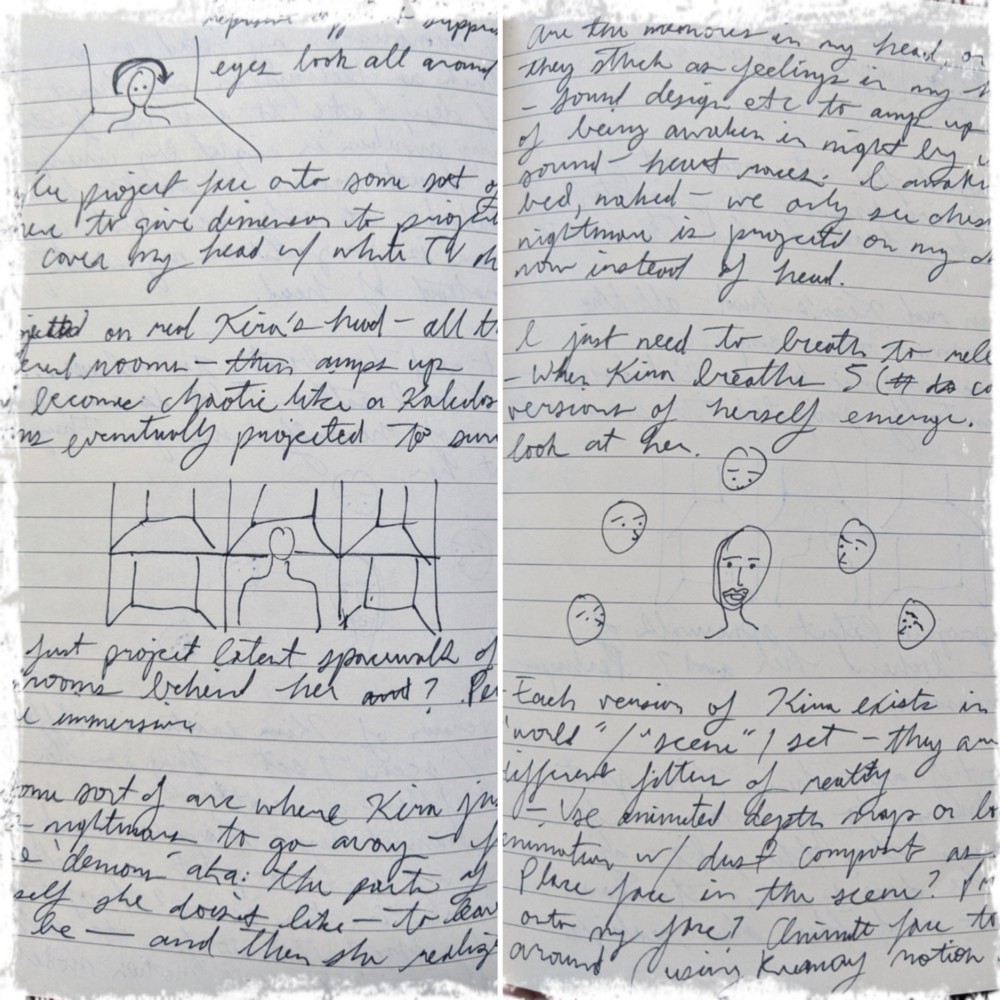

Film Concept

After these initial model training experiments, the general film concept came to me through a meditation and then creative stream of consciousness writing in my notebook. The selfies trained off of the skyscraper model portrayed my projected self — the ‘pleasant’ version of myself that I show to the world. The selfies trained off of the faces model portrayed the more chaotic, emotional, forever-changing self that was hidden away.

Through simply breathing and allowing my emotions to be as they are, I could finally process them. I had to experience the feelings in order for them to be freed.

I would portray the effects of suppressed compartmentalized feelings through the bookend narrative visuals of sleep: in the beginning I am struggling with insomnia… and when I do sleep, I am haunted by nightmares. In the end I finally fall asleep with ease… and I dream of nothing.

In my notebook I brainstormed scenes and sketched out potential visuals to composite.

Other Elements & Models

Within my film I utilized latent spacewalks and generated images from my models. I then took these elements and ran them through a few different models within Runway. The main models I used: MiDaS, MASKRCNN, First Order Motion Model and Liquid Warping GAN.

- I ran the bedroom-trained latent spacewalks through the MiDaS model to generate depth maps.

- I ran various generated faces through MASKRCNN to mask out the backgrounds. Next I ran those masked faces through First Order Motion Model to bring the faces to life for the different scenes. I filmed reference videos on my phone of my face expressing different emotions and moving in different directions to base the facial movements off of.

- In Photoshop I created a (very ugly!) character using elements generated by the models I trained. I ran the character through Liquid Warping GAN to generate an animated walking cycle based on a video clip of someone walking that I sourced from YouTube. Later, in After Effects, I motion tracked one of the First Order Motion faces onto this body.
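For readers wondering what a latent spacewalk is under the hood, here is a minimal sketch of the general idea. Runway generates the walks for you, so this is not its implementation; the latent size of 512, the number of keyframes and the function names are all assumptions made for illustration. The idea: pick a few random points in the model's latent space, glide between them in small steps, and hand each in-between point to the trained generator to get one video frame.

```python
import random

def lerp(a, b, t):
    """Linearly interpolate between two equal-length vectors (t in 0..1)."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]

def latent_walk(keyframes, steps_per_segment):
    """Return a list of latent vectors passing through each keyframe in order."""
    path = []
    for start, end in zip(keyframes, keyframes[1:]):
        for i in range(steps_per_segment):
            path.append(lerp(start, end, i / steps_per_segment))
    path.append(list(keyframes[-1]))  # finish exactly on the last keyframe
    return path

rng = random.Random(0)
LATENT_DIM = 512  # assumed latent size, for illustration only

# Four random "destinations" in latent space.
keyframes = [[rng.gauss(0, 1) for _ in range(LATENT_DIM)] for _ in range(4)]

# 30 in-between steps per segment gives 3 * 30 + 1 = 91 latent vectors.
walk = latent_walk(keyframes, steps_per_segment=30)

# Each vector in `walk` would then be fed to a trained generator
# (not included here) to render one frame of the morphing video.
```

More steps per segment means a slower, smoother morph; fewer steps means faster, jumpier changes.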

Compositing in After Effects

For the nightmares:

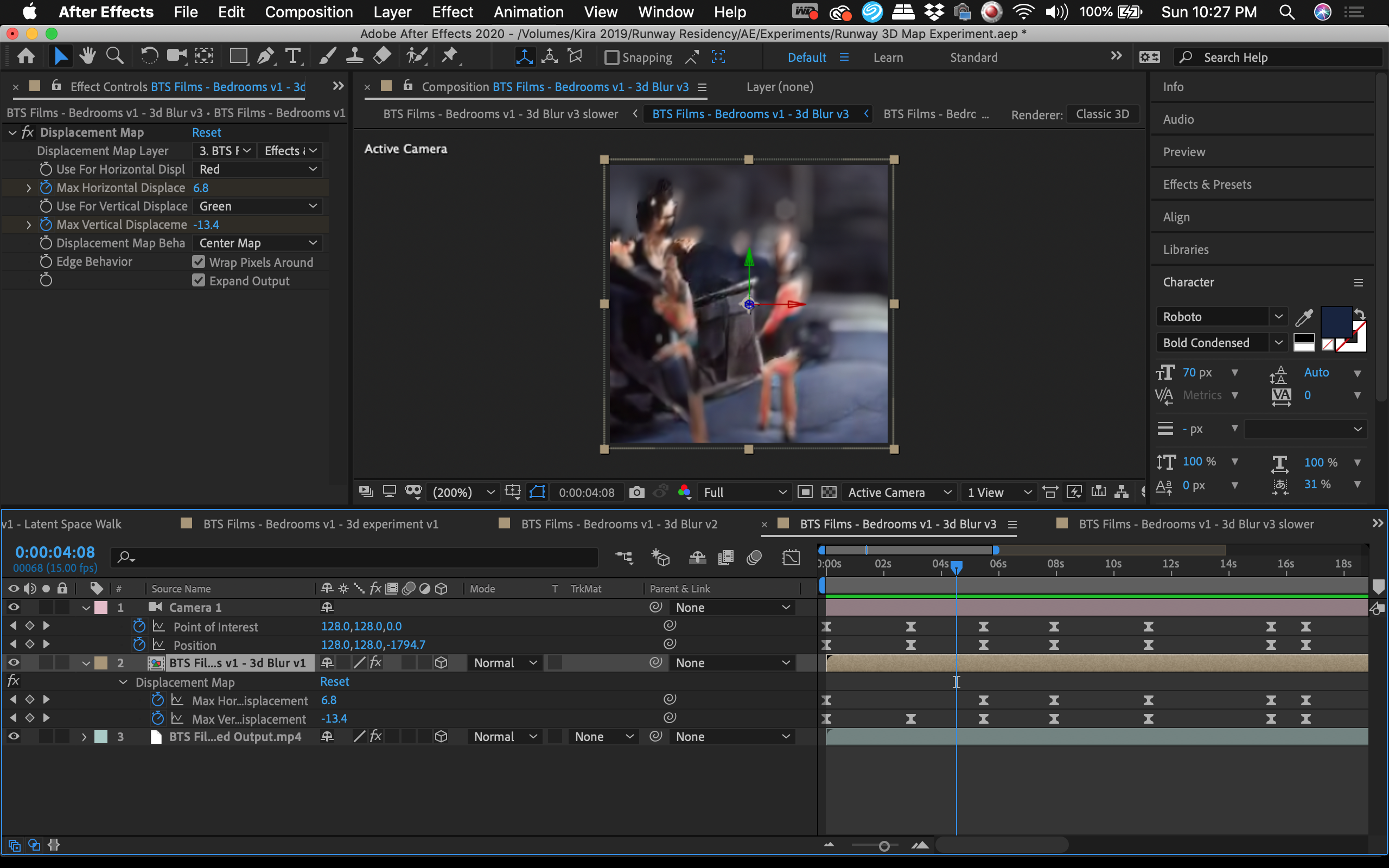

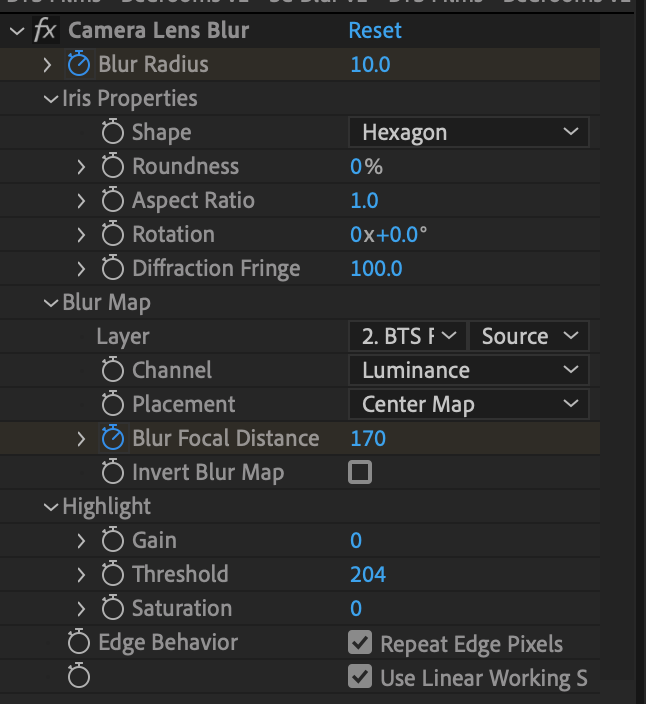

I brought the MiDaS depth map videos and latent spacewalks into Adobe After Effects and animated a 3D camera blur driven by the information in the depth map. This makes the video (slightly) less disorienting and helps lead the viewer’s eye to specific elements.
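To show the idea behind a depth-driven blur, here is a small sketch in plain Python. It is not what After Effects does internally: the 3x3 box blur, the `focus` and `falloff` parameters and the tiny grayscale "image" are all assumptions chosen to keep the example readable. Pixels whose depth matches the focus distance stay sharp, and the further a pixel's depth is from the focus, the more of the blurred version is mixed in.

```python
def box_blur(img):
    """3x3 box blur on a 2D grayscale list, averaging only in-bounds neighbors."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            total, count = 0.0, 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        total += img[yy][xx]
                        count += 1
            row.append(total / count)
        out.append(row)
    return out

def depth_blur(img, depth, focus, falloff=2.0):
    """Blend sharp and blurred pixels based on distance from the focus depth.

    `depth` holds values in 0..1; `focus` and `falloff` are illustrative knobs.
    """
    blurred = box_blur(img)
    out = []
    for y in range(len(img)):
        row = []
        for x in range(len(img[0])):
            amount = min(1.0, abs(depth[y][x] - focus) * falloff)
            row.append((1.0 - amount) * img[y][x] + amount * blurred[y][x])
        out.append(row)
    return out

image = [[0, 0, 0], [0, 9, 0], [0, 0, 0]]      # one bright pixel
in_focus = [[0.5] * 3 for _ in range(3)]       # everything at the focus depth
far_away = [[1.0] * 3 for _ in range(3)]       # everything far from focus

sharp = depth_blur(image, in_focus, focus=0.5)  # identical to the input
soft = depth_blur(image, far_away, focus=0.5)   # fully blurred
```

Animating `focus` over time is what moves the sharp region through the scene and guides the eye.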

Next I created a displacement map utilizing the depth map video. I animated the horizontal and vertical displacement as well as a digital camera to heighten the sense of depth and movement in the scenes. I utilized this technique for the first nightmare, which I ended up speeding up in the final edit, so you can’t really see its results in the final film.
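The displacement idea can be sketched in a few lines of plain Python. This is a simplified illustration, not After Effects' actual effect: the function names, the choice of treating a depth of 0.5 as "no shift" and the nearest-pixel sampling are assumptions of this sketch. Each output pixel is copied from a source pixel that is offset by an amount proportional to the depth map value at that position.

```python
def clamp(value, low, high):
    """Keep value inside the inclusive range low..high."""
    return max(low, min(high, value))

def displace(img, depth, max_shift_x=0, max_shift_y=0):
    """Shift pixels by an amount driven by a depth map with values in 0..1.

    Assumption of this sketch: depth 0.5 means no shift, 1.0 means the full
    positive shift and 0.0 means the full negative shift.
    """
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            offset = (depth[y][x] - 0.5) * 2.0  # remap 0..1 to -1..1
            src_x = clamp(x + round(offset * max_shift_x), 0, w - 1)
            src_y = clamp(y + round(offset * max_shift_y), 0, h - 1)
            row.append(img[src_y][src_x])
        out.append(row)
    return out

row = [[0, 1, 2, 3, 4]]   # a one-row "image"
flat_mid = [[0.5] * 5]    # mid-depth everywhere: nothing moves
flat_near = [[1.0] * 5]   # near everywhere: full shift

unchanged = displace(row, flat_mid, max_shift_x=2)   # [[0, 1, 2, 3, 4]]
shifted = displace(row, flat_near, max_shift_x=1)    # [[1, 2, 3, 4, 4]]
```

Calling `displace` once per frame with a slowly changing `max_shift_x` and `max_shift_y` gives the animated, parallax-like movement described above.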

Then I overlaid dust, film grain and other effects that helped make the edges crisper. The bedroom-trained latent spacewalks are set to export at 250x250 pixels… so I decided to fully embrace the lofi, degraded quality by applying effects to give an overall cohesive and purposeful look to the whole film.

Faces floating in scenes:

I pulled a clip from the set design / bedroom latent spacewalk. I then slowed it down and looped it to create a morphing space as a background. I took one of the First Order Motion faces and composited it floating in the scene.
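Slowing a clip down and looping it can also be done outside of an editor. The sketch below is one simple way to do it and not necessarily how the loop in the film was built: it assumes frame repetition for the slowdown and a "ping-pong" (forward, then backward) pass for the loop, and both function names are made up for this example.

```python
def slow_down(frames, factor):
    """Repeat every frame `factor` times, e.g. factor=2 plays at half speed."""
    return [frame for frame in frames for _ in range(factor)]

def ping_pong(frames):
    """Play forward, then backward, without repeating the two end frames.

    The result can be repeated back to back with no visible jump.
    """
    return frames + frames[-2:0:-1]

clip = [1, 2, 3, 4]                  # stand-ins for four video frames
slowed = slow_down(clip, 2)          # [1, 1, 2, 2, 3, 3, 4, 4]
looped = ping_pong(clip)             # [1, 2, 3, 4, 3, 2]
background = ping_pong(slow_down(clip, 2))
```

Because a latent spacewalk morphs continuously, playing it backward looks just as natural as playing it forward, which is why a ping-pong loop works well for this kind of footage.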

Dream Spaces:

In AE I took the bedroom / set latent spacewalk and created “rooms” side by side. I then composited the animation of the character on top and animated a 3D camera to give the illusion of the character walking by all of these rooms.

For the sleeping scenes: I constructed the 3D room in After Effects by using the set design / bedroom latent spacewalk as all of the walls and bed.

In Conclusion…

Wow, I learned so much from making this film! I tried so many new techniques. Some particular highlights: The latent spacewalks that you can generate from your own models are incredible. I can see so many uses for this. The ability to create such striking, original visuals with such ease… it’s inspiring.

I’ve never worked with depth maps before, but the MiDaS model is a game changer for me! The ability to create depth maps from videos so easily gets my mind going… I’m excited to see how I can apply it in the future.

I can imagine the Runway application will be useful and exciting for many kinds of artists: filmmakers, visual artists, VJs, animators, etc… I may create a Skillshare course soon specifically geared towards less-technical creatives who are interested in generating original and unique machine learned visuals within Runway.

Thanks again to the Runway team for their contributions to the world of crazy creative possibilities and for their support in this filmmaking process.

I hope you enjoyed the film and this post! If you have any questions, want to say hello or are interested in collaborating, please feel free to follow my work and reach out:

Email: kira@allaroundartsy.com

Website: www.allaroundartsy.com

Instagram: @allaroundartsy

YouTube: https://www.youtube.com/c/kiraburskyfilms