Introspections

My name is Philipp Schmitt. I am an artist and designer based in Brooklyn. I had the tremendous pleasure and privilege to join Runway’s founding team of three as the first Something-in-Residence.

During my residency, I built a prototype integration of Runway in Adobe Photoshop to experiment, speculate, and study how AI might affect creative tools. I experimented with using GAN-generated images in graphic design and with adding a neural-net-based masking function as a potential alternative to Adobe’s own proprietary code.

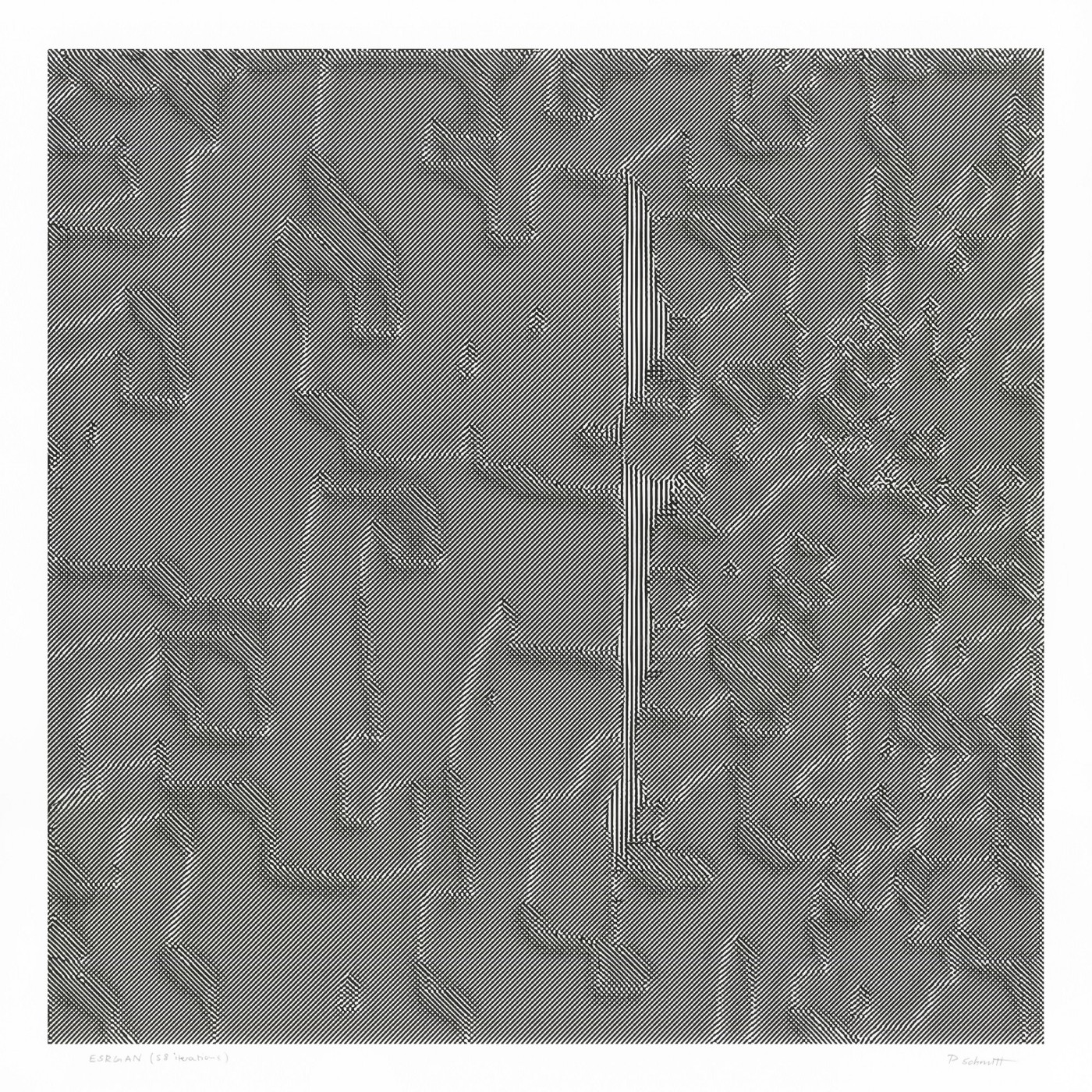

Most fascinating for me personally was pushing Runway’s machine learning models to produce unexpected results by feeding them irregular inputs. ESRGAN, for example, is a super-resolution model intended for upscaling photographs without blurring them (which works okay-ish at best).

But generate a patch of random noise in Photoshop, use that as input, and the model produces a nice texture reminiscent of a composition notebook.

New techniques and workflows can be discovered by connecting models together. Creative professionals spend a lot of time on tedious and uninteresting tasks. AI will help by better understanding their problems and offering faster solutions: (4/8) pic.twitter.com/X0KA2lyByy

— Runway (@runwayml) April 5, 2019

Having access to Runway in Photoshop makes it possible to experiment with the latest machine learning models intuitively, in a tool creatives already use on a daily basis. It enables creators without deep technical experience to discover new aesthetics, approaches, and tools instead of leaving everything to the vendor. Click on this Twitter thread to see some of my experiments:

For the last few weeks, our Something in Residence @philippschmitt has been researching, speculating and studying how AI will affect design processes. Here are some thoughts and a sneak peak on some early stage experiments using @runwayml and @photoshop (Thread👇)

— Runway (@runwayml) April 5, 2019

Introspections

My recent work focuses on the notion of opacity in AI: even the creators of ML models don’t fully understand them and cannot visualize the full breadth of what constitutes this artificial intelligence.

Real-time, easy access to ML through Runway is useful for figuring out what this technology might be used for. Perhaps equally importantly, it also makes it easier to probe AI itself. Through experimentation with ML models in Photoshop, I made a discovery:

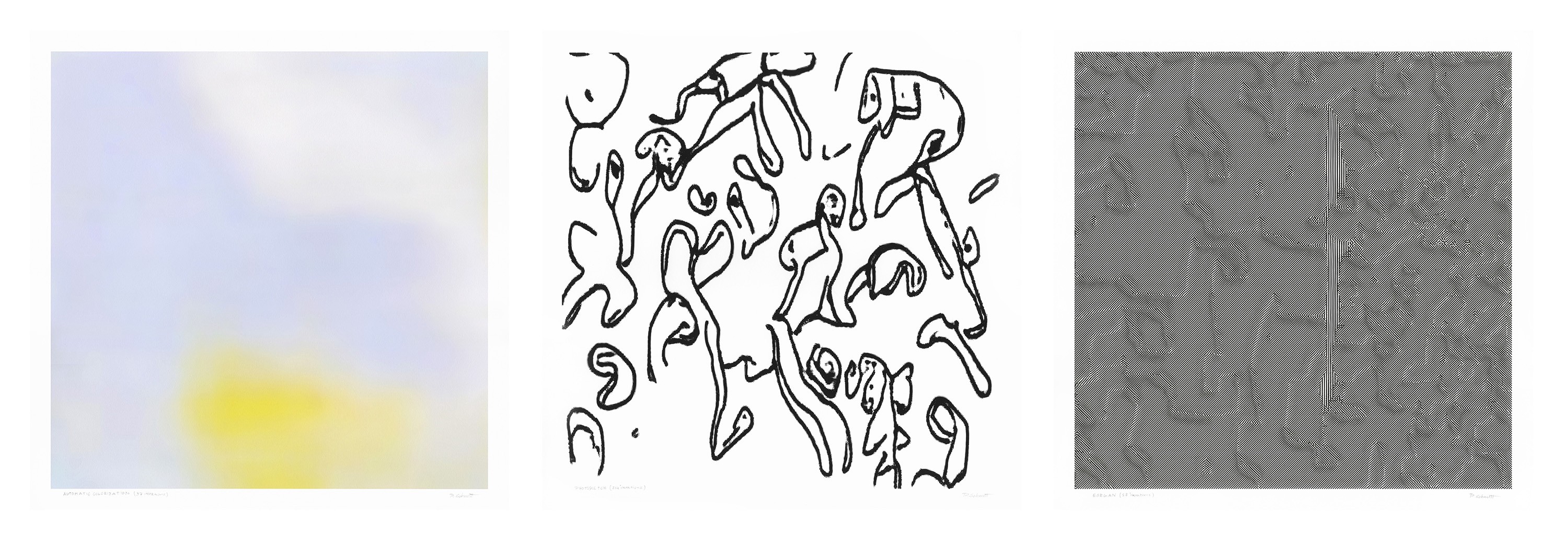

Sending a blank canvas to a machine learning model meant to process photos returns … a blank image. But repeat the action over and over and the model starts to introduce its own artifacts in a feedback loop. The artifacts are subtle at first, but the model ultimately devours the image, creating an abstract visualization of its own inner state and architecture. An introspection made visible, the piece materializes a glimpse into the opaque, high-dimensional vector spaces in which AI makes its meaning.
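For readers who want to see the mechanics, here is a minimal sketch of such a feedback loop in plain Python. It does not call Runway or any real model: `toy_model` is a hypothetical stand-in that returns its input almost unchanged, with a slight sharpening and a faint checkerboard bias that I chose arbitrarily to play the role of a model's own artifacts. The canvas size, gain, and bias values are likewise assumptions made for illustration.

```python
SIZE = 8      # canvas is SIZE x SIZE pixels, values in [0.0, 1.0] (assumed)
GAIN = 0.25   # how strongly the toy model sharpens local differences (assumed)
BIAS = 0.002  # strength of the toy model's built-in "fingerprint" (assumed)


def toy_model(image):
    """Hypothetical stand-in for an image-to-image model (not a Runway API).

    Returns the input almost unchanged: a slight sharpening plus a faint
    checkerboard bias that stands in for the model's own artifacts.
    """
    n = len(image)
    out = []
    for y in range(n):
        row = []
        for x in range(n):
            # Mean of the four neighbours, wrapping around at the edges.
            neighbours = (image[(y - 1) % n][x] + image[(y + 1) % n][x]
                          + image[y][(x - 1) % n] + image[y][(x + 1) % n]) / 4
            fingerprint = BIAS if (x + y) % 2 == 0 else -BIAS
            value = image[y][x] + GAIN * (image[y][x] - neighbours) + fingerprint
            row.append(min(1.0, max(0.0, value)))  # clamp to the pixel range
        out.append(row)
    return out


def contrast(image):
    """Difference between the brightest and the darkest pixel."""
    flat = [v for row in image for v in row]
    return max(flat) - min(flat)


def feedback_loop(image, passes):
    """Feed the model its own output `passes` times; return every frame."""
    frames = [image]
    for _ in range(passes):
        image = toy_model(image)
        frames.append(image)
    return frames


blank = [[0.5] * SIZE for _ in range(SIZE)]  # uniform mid-gray canvas
frames = feedback_loop(blank, 30)
for step in (1, 5, 10, 30):
    print(f"pass {step:2d}: contrast = {contrast(frames[step]):.3f}")
```

In this toy setup the first pass is practically indistinguishable from the blank input, while later passes amplify the fingerprint until it fills the whole canvas. Real models are far more complex, so their artifacts look nothing like a checkerboard, but the loop structure is the same: output becomes input, again and again.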

To me, the images read as desires: A model for Automatic Colorization reminds me of looking up into the blue sky on a sunny day, eyes half-closed, after gray winter months.

ESRGAN, a super-resolution model, appears to have zoomed in to the nano level of an undefined material, or to be looking down at a mountainous landscape from a bird’s-eye view.

And PhotoSketch, a model that turns photos into sketches, seems to have drawn a crowd of people, dancing perhaps.

I would like to thank my friends at Runway for the opportunity to spend the Spring together thinking, making, eating. It has been inspiring to be a part of this young company’s early steps and trajectory. Thank you — for your work democratizing access to emerging tech and for supporting artists from day one.